To Learn. By definition, learning is the acquisition of knowledge through study, training, practice, or being taught.

Every intelligent organism learns. Some species evolve to communicate with one another. Others show the potential to share knowledge across species. However, the ability to learn and communicate at a heightened level is what makes us human.

The capacity to learn from our mistakes and our past through experimentation and deduction, all while analyzing information, is what leads to the acquisition of new skills, more in-depth knowledge, and overall understanding.

Though human nature allows for repeat mistakes, the ability to develop our learning gives us the opportunity to achieve more significant results. Such results bring new inventions and drive progress in all facets of our lives. Moreover, despite all of our faults and shortcomings, the pursuit of knowledge and the development of our learning are why we have become the advanced species that we are today.

History

Computational models began to appear in the very early 1950s. Arthur Samuel, an American pioneer in the field of computer gaming and Artificial Intelligence, coined the term “Machine Learning” in 1959. It was then that the concept of Machine Learning began to move from theory to reality. The goal of Machine Learning is, and always has been, for a machine to learn from many different types of data and ultimately make predictions, provide expert answers, or offer guesses without being explicitly programmed to do so. A machine’s solutions are based on its acquired knowledge, not predetermined by code. Machine Learning, and therefore Artificial Intelligence, are concepts meant to handle the recognition, understanding, and analysis of data. These concepts have been evolving rapidly over time. In most cases, technological limitations have been the bottleneck for further progress. Only recently has Machine Learning (more specifically, its subset called “Deep Learning”) been able to take advantage of readily available and affordable computational infrastructure that can handle the parallel processing of complex mathematical tasks through advanced algorithms.

Early developments returned limited results. As a result, a so-called “AI Winter” set in, a period of reduced funding and interest in Artificial Intelligence. The term originated in 1984 as the topic of a public debate; the downturn itself began with severe cutbacks in funding, followed by the end of serious research. Interest in Machine Learning began to pick up in the early 2000s with the creation of the Torch software library (2002) and, later, ImageNet (2009). The opposite of an “AI Winter,” an “AI Spring,” began to emerge in the early 2010s. Now, in 2018, we are turning the page on what could be a global industry surge with AI and Machine Learning.

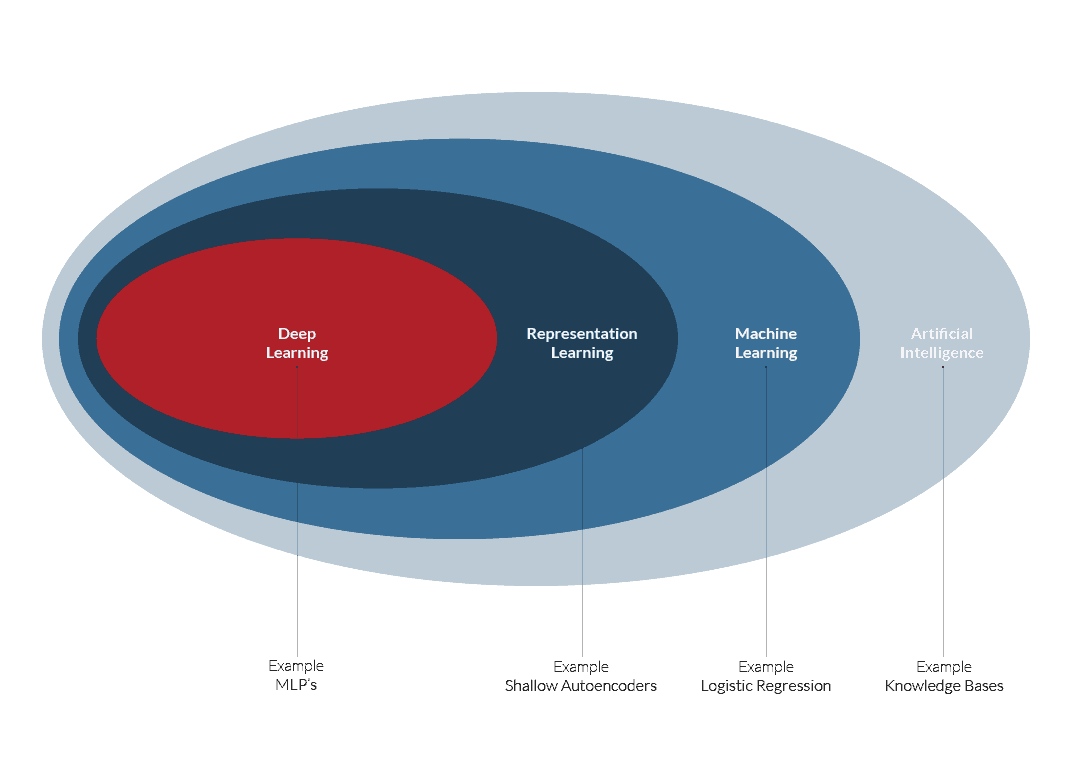

It’s worth explaining the traditional hierarchy of these systems: Deep Learning is a subset of Machine Learning, and Machine Learning is a subset of Artificial Intelligence.

Primarily, the progress of traditional AI (i.e., knowledge-based, rule-based systems, such as the Deep Blue chess program) came to a halt until large amounts of digitized data and computational power became available over the last ten years. This has enabled computing-intensive algorithms to learn from data. That’s when ML and DL began to shine and became positioned to revolutionize how things work in many industries.

Machine Learning vs. Artificial Intelligence vs. Deep Learning

Industry

Currently, Artificial Intelligence, Machine Learning, and Deep Learning are beginning to provide a competitive edge in the following industries and markets:

Health Care

Health care is one of the largest fields in which tremendous amounts of knowledge, data, variables, statistics, and unknowns are managed. However, far more than data is at stake here: the health and well-being of ourselves and our loved ones. With stakes this high, the medical field is, and always will be, one of the most important industries in which AI applications can show and prove their worth.

The analysis of seemingly insurmountably-sized data sets and countless records to identify trends and tendencies, based on limitless variations of medical records, disease patterns, genetic tendencies, etc., is precisely the benefit to having a well-trained and adaptable AI system. Consider analyzing the human genome to discover cures to illnesses or to accelerate life-saving discoveries. These real-world applications are but a few examples of use-case scenarios that medicine will be able to offer us within the very near future.

While many of these applications are in continuous development, helping doctors and researchers alike, we are still in the very early stages of discovering and harvesting AI’s full potential in this field. With unceasing efforts from scientists, developers, and hardware manufacturers, we are breaking new performance barriers at a rapid rate. Hardware manufacturers such as NVIDIA and Intel supply the industry with the building blocks necessary to achieve better, faster, and more precise results that will lead to exponential improvements in human health, livelihood, and overall well-being for the world as a whole.

Robotics

From logistics to manufacturing to the military, robotics plays a tremendous role in today’s supply chain industry. Advanced levels of automation have already been achieved with the use of Deep Learning applications. A robot’s ability to build, manipulate, categorize, sort, and move objects has become a cornerstone of the modern manufacturing industry. Additionally, airborne and land-based drones and autonomous vehicles are used in rescue operations, the military, public safety and security, transport, entertainment, agriculture, and even medicine. The combined use of Artificial Intelligence and Robotics is finding its way into more industries every day.

Space exploration is another crucial industry that relies on AI and robotics. Interestingly enough, NASA is not a branch of the US military but an independent agency of the federal government. Even so, many technologies, some involving robotics, are in use long before they become public knowledge. The intersection of science, technology, industry, and power has always been prevalent, and there is no better example than space exploration.

Marketing

Deep Learning is genuinely changing the game for the marketing industry. Marketers strive to engage, entice, and educate their audience, and the applicability of Deep Learning here is virtually endless. It allows not just for clearer understanding and target recognition; it also creates unique, personal engagements based on behavioral patterns and timely opportunities. From identifying ideal clients to determining a customer’s opportune purchase moment, applying GPU Machine Learning to market trends can disrupt markets and industries. The ad-tech industry, for example, uses Deep Learning for predictions and real-time bidding to recognize and maximize opportunities and radically improve cost efficiency for businesses.

Retail

The retail industry has embraced AI for years. From tracking stock levels to monitoring foot traffic, large-scale computational data is increasingly vital to an industry that often struggles to stay afloat. Consider the future of retail for a moment. Imagine a densely distributed system of cameras installed throughout a store which, combined with AI image recognition and specific scanning abilities, could allow registered customers to pick up items and leave the store without visiting a register. Purchases would automatically be billed to a customer’s credit card upon leaving.

Many retailers are experimenting with this type of AI application for long-term cost savings and unmatched customer convenience. The same data can also inform strategies for customer retention, product placement based on foot traffic, and accessibility.

E-Commerce Use of Artificial Intelligence

From chatbots to suggestions of new products based on previous purchases or products you’ve researched, Artificial Intelligence and Deep Learning are everywhere in the e-commerce industry. These technologies create a personal touch with a customer and enable digital tracking for the marketer. Additionally, recognizing and predicting patterns in consumer behavior has been revolutionary for industries such as airlines and hospitality. Consider how quickly prices can change and adapt based on events or pattern fluctuations. This information is tailored through detailed AI to maximize both productivity and company profit.

Cybersecurity & Machine Learning

From security penetration testing and monitoring to detection and prevention, the use of Machine Learning and Deep Learning in cyber threat protection is growing exponentially. Deep Learning is primarily used to identify anomalies and malicious behavior, such as the activity of bad actors who intend to cause harm. It can detect a threat in its early stages or, in an ideal world, prevent an attack from occurring entirely. Well-designed DL algorithms and solutions help security experts assess risks and narrow their focus from potentially thousands of incidents to the most aggressive attacks. DL-based tools often provide a visual representation for quicker and more comprehensive analysis. From discovering, predicting, and preventing DDoS attacks to detecting network and system intrusions, DL has become the cornerstone of many reliable tools that SOC teams use daily.
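As a rough illustration of the anomaly detection idea, the sketch below flags unusual values in a series of per-minute request counts. It deliberately swaps in a simple statistical technique (a modified z-score based on the median absolute deviation) in place of a Deep Learning model. The function name `find_anomalies`, the sample traffic numbers, and the 3.5 threshold are all assumptions made for this example.

```python
from statistics import median

def find_anomalies(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold.

    This is a simple statistical stand-in, not a Deep Learning model.
    """
    center = median(values)
    # Median absolute deviation: a spread estimate that outliers barely move.
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread to compare against, so nothing is flagged
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > threshold
    ]

# Hypothetical requests per minute; the spike could hint at a DDoS attempt.
requests_per_minute = [120, 115, 130, 125, 118, 122, 900, 119]
suspicious = find_anomalies(requests_per_minute)
print(suspicious)  # prints [6]
```

A production system would learn what "normal" looks like from far richer signals, but the principle is the same: establish a baseline, then surface only the events that deviate sharply from it.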

Driverless Vehicles

Major players in the ride-share community, such as Uber and Lyft, have long understood the importance of driverless vehicles to expand their platform. Even tech giants such as Google and Apple are entertaining the idea of driverless technology with Google’s Waymo program and Apple’s (admittedly secret) “Project Titan” making recent headlines. Naturally, major auto manufacturers are dipping their toe into the water as well, with automation enhancements like Tesla’s “Autopilot” features. Striving for flawless autonomy in transport is a lofty goal, but one many are eagerly attempting.

Deep Learning and Inference are at the core of this industry, and while it has its multitude of challenges, ranging from technological impediments to government regulation, the fact that we will be surrounded by self-driving vehicles for personal and industrial purposes is unavoidable. While we may not be a fully autonomous transport society yet, a smart, self-driving automated future will be the reality in the years to come.

Human Resources and Recruitment Industries

HR is a prime example of where AI and DL can aid an overloaded industry. Companies such as LinkedIn and Glassdoor have been using this technology for years. Scanning profiles for specialized skills, industry experience, activities, location, and even competitor experience is nothing short of necessary in the modern world.

The days of handing over a CV to be reviewed entirely by a person are all but gone. Now, algorithms can build a much fuller picture of your experience and personality, based not just on your CV, but also on your social media and online presence combined with the behavioral patterns of your profile (age, gender, location, etc.). Your interests, as well as predictions of your next move (do you change jobs often?), are compared with those of other potential candidates to estimate your likelihood of being hired.

As intrusive as this may initially appear, automation and algorithms have the distinct advantage of being able to bypass the personal preferences and biases of recruiters themselves. DL and AI will ultimately run the future of HR.

Financial Industry

Fraud is among the most relevant and public-facing risks that banks and payment providers face, which makes fraud detection and prevention a key application. GPU Deep Learning and Artificial Intelligence platforms also help predict economic and business growth opportunities in order to make or suggest sound investments that minimize risk. Having a system that continually monitors the entire industry for pattern-building trends is not only essential; it is also impossible to do on a human scale. Companies that use AI and DL technologies tremendously increase their chances of disrupting established market incumbents.

Weather, Climate and Seismic Data

From weather pattern predictions and advanced storm modeling to disaster prevention, Artificial Intelligence plays an integral role in the weather industry. Constant monitoring of potential threats allows countermeasures to be put in place so that people can evacuate an area ahead of natural disasters such as hurricanes, tornadoes, earthquakes, wildfires, or flooding.

Accurately predicting a natural disaster or the potential destruction of urban infrastructure or crops can allow investment firms and stock market players to maximize their profits and minimize their risk with a correctly placed (or avoided) investment. Additionally, the oil and gas industry benefits from the use of AI to map the distribution, size, and location of underground resource pockets, which maximizes efficiency while minimizing environmental impact.

Big Data

Data mining, statistical analytics, and forecasting have incredible applications in various markets and are becoming increasingly crucial in business and political decision-making. The tremendous amount of data being collected is useful not just for AI and its prediction algorithms; immediate access to historical and real-time information also allows decision makers in all fields to make more informed and calculated choices (personal human biases aside).

While AI can assist with prediction and pattern recognition in large datasets, humans are ultimately responsible for final decisions and, as such, are liable for all consequences. With the assistance of a well-trained AI algorithm, those consequences are becoming fewer and fewer.

Enter GPU Machine Learning

A groundbreaking technological advancement for the Machine and Deep Learning industry arrived not long ago: the Graphics Processing Unit (GPU) server. Most CPUs manage complex computational tasks effectively, but from a performance and financial perspective they are not ideal for Deep Learning tasks, where thousands of cores are needed to work on simple calculations in parallel. Using general purpose CPUs for such purposes tends to be cost-prohibitive, and this is where the GPU comes in.

GPUs were initially developed for image rendering and manipulation, but developers realized they could use them for other tasks thanks to their design and massive capacity for parallel processing. With thousands of simple cores per single GPU, these components quickly became the foundation for today’s Deep Learning applications.
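To make the "many simple calculations in parallel" idea concrete, here is a minimal sketch in plain Python. It computes the output of one fully connected layer twice: once row by row, and once by handing each row to a separate worker. The function names and the toy weights are assumptions for illustration, and Python threads are used only to show that the per-row calculations are independent; they do not deliver GPU-style speedups.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, vec):
    """One simple calculation: multiply pairwise and add up."""
    return sum(w * x for w, x in zip(row, vec))

def layer_forward_sequential(weights, inputs):
    # Each output neuron is computed one after another.
    return [dot(row, inputs) for row in weights]

def layer_forward_parallel(weights, inputs, workers=4):
    # No output depends on any other, so each row can go to its own worker.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))

# Toy layer with 3 inputs and 4 output neurons (illustrative values only).
weights = [
    [1.0, 2.0, 3.0],
    [0.5, -1.0, 0.0],
    [2.0, 0.0, 1.0],
    [-1.0, 1.0, -1.0],
]
inputs = [1.0, 2.0, 3.0]

sequential = layer_forward_sequential(weights, inputs)
parallel = layer_forward_parallel(weights, inputs)
print(sequential)              # prints [14.0, -1.5, 5.0, -2.0]
print(parallel == sequential)  # prints True
```

A real network has layers with thousands of such rows, which is why hardware with thousands of simple cores is such a good match for the workload.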

Deep Learning Training

Deep Learning Neural Networks are becoming continuously more complex. The number of layers and neurons in a Neural Network is growing significantly, which lowers productivity and increases costs. Deep Learning deployments leveraging GPUs drastically reduce the size of the hardware deployments, increase scalability, dramatically reduce the training and ROI times and lower the overall deployment TCO.
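A quick back-of-the-envelope calculation shows how fast a network grows as layers and neurons are added. The sketch below counts the trainable parameters (weights plus biases) of a plain fully connected network; the function name and the two example layer layouts are assumptions chosen for illustration.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output neuron
    return total

# Hypothetical layouts: 784 inputs (a 28x28 image) and 10 output classes.
small = count_parameters([784, 128, 10])
large = count_parameters([784, 512, 512, 10])
print(small, large)  # prints 101770 669706
```

Widening the hidden layer and adding a second one multiplies the parameter count more than sixfold, and every one of those parameters must be updated on each training step.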

Deep Learning Inference

Trained Neural Networks are deployed for inference in production environments. Their task is to recognize spoken words and images, predict patterns, and so on. Just as during training, speed is of the utmost importance, especially when a workload deals with “live predictions.” Besides processing speed, throughput, latency, and network reliability also play a vital role. Deployment of GPUs in the cloud is the solution.
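The relationship between latency and throughput can be sketched with a little arithmetic. The cost model below is purely hypothetical: it assumes each inference call pays a fixed overhead plus a small cost per item in the batch, and the 5 ms and 0.5 ms figures are invented for illustration rather than measured on any hardware.

```python
def batch_latency_ms(batch_size, overhead_ms=5.0, per_item_ms=0.5):
    """Hypothetical cost model: fixed overhead plus a per-item cost."""
    return overhead_ms + per_item_ms * batch_size

def throughput_per_second(batch_size, latency_ms):
    """Requests completed per second when batches run back to back."""
    return batch_size / (latency_ms / 1000.0)

for batch_size in (1, 8, 32):
    latency = batch_latency_ms(batch_size)
    rate = throughput_per_second(batch_size, latency)
    print(f"batch={batch_size:>2}  latency={latency:5.1f} ms  "
          f"throughput={rate:7.1f} req/s")
```

Under these assumptions, larger batches raise throughput because the fixed overhead is shared, but every request in the batch waits longer for its answer. For live predictions, that trade-off has to be tuned against the latency the application can tolerate.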

Developers, data scientists, and hardware manufacturers jumped on this opportunity, with NVIDIA leading the charge on the hardware side. Now, because of companies such as NVIDIA, there is a mature software ecosystem supporting a wide range of Deep Learning frameworks, applications, and libraries. GPUs shine in their ability to harness many processing cores and scale to handle tremendous numbers of simultaneous instructions. General purpose CPUs still play an important role in the AI segment because they are cost-effective: they are easy to distribute as components for Inference, and they support GPUs in the network and storage parts of computational tasks. Graphics Processing Units, however, are the hi-tech leaders in meeting today’s massive and continuously rising demand for parallel processing infrastructure.

From Artificial Intelligence, Machine Learning, Deep Learning, and Big Data manipulation to 3D rendering and even streaming, the requirement for high-performance GPUs is unquestionable. With companies such as NVIDIA valued at over $6.9B, the demand for technologically powerful compute platforms is increasing at a record pace. Additionally, the Deep Learning market is projected at $3.1B for 2018 and is expected to grow to over $18B by 2023.

New deep neural networks, many of them Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), are deployed on a regular basis, which makes naming them all nearly impossible and imprudent. Though we don’t have the opportunity to interact with every network that is built, there are some very real use cases that we interact with on a daily basis. Facial recognition, natural language processing, and voice recognition are some of the most identifiable Deep Learning applications.

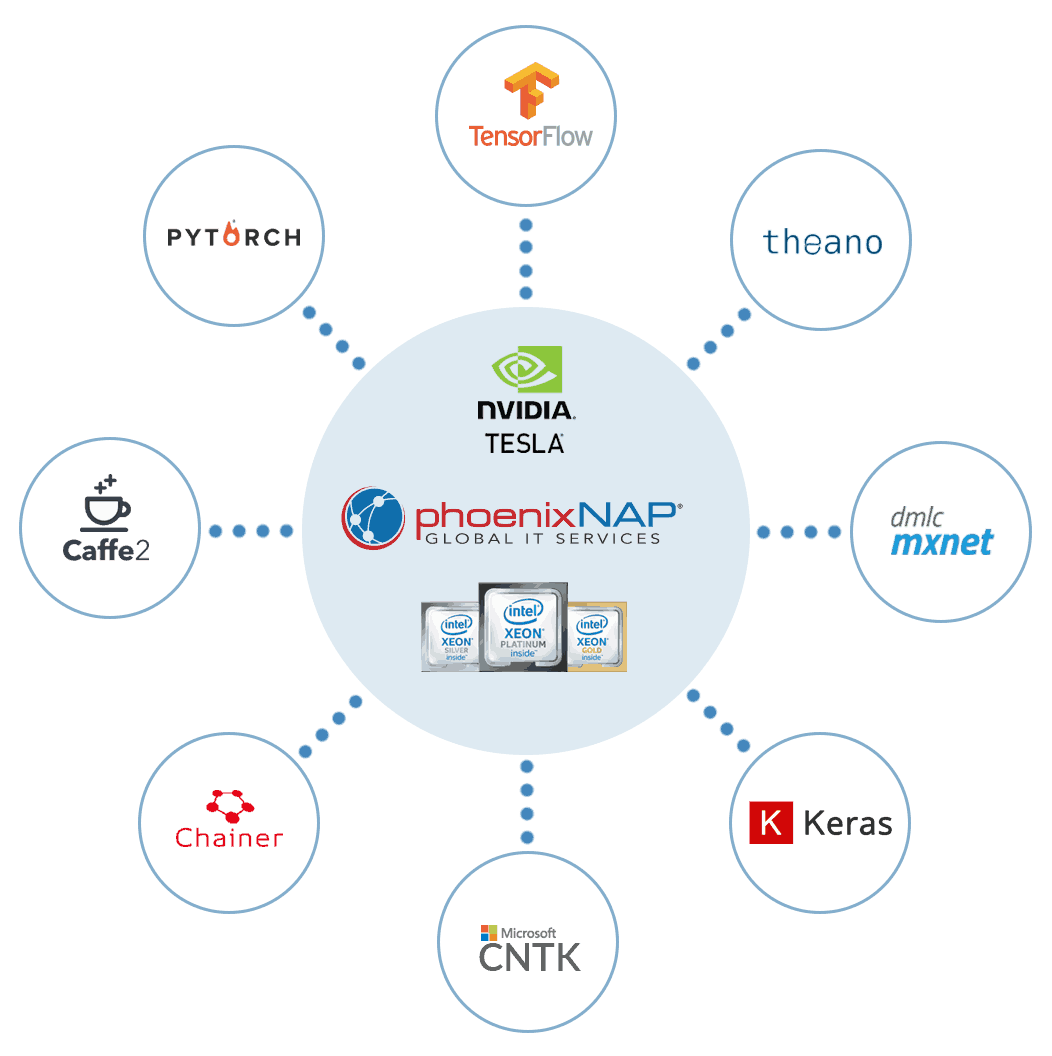

CNNs tend to be used for image-related tasks, while RNNs are better suited to voice and language patterns. Beyond the choice of network type, there are many Deep Learning frameworks, each tending to suit different kinds of tasks. The most popular frameworks include:

- TensorFlow – one of the most popular DL frameworks today and excellent for speech recognition, text classification, and other NLP applications. TensorFlow works with CPUs and GPUs alike.

- Keras – great for text generation, classification, and speech recognition, and suitable for running on GPUs and CPUs alike. It uses a Python API and is often installed with TensorFlow.

- Chainer – best suited for speech recognition and machine translation. It supports CNNs and DNNs alike, as well as NVIDIA CUDA and multi-GPU nodes. Chainer is Python-based.

- PyTorch – primarily used for machine translation, text generation, and Natural Language Processing tasks. It achieves great performance on GPU infrastructure.

- Theano – suitable for text classification and speech recognition, but predominantly used for creating new Machine Learning models. Theano is a Python library.

- Caffe/Caffe2 – prized for its performance and speed, and primarily used for image recognition with CNNs. It offers a Python interface, and its Model Zoo provides many pre-trained models that are ready to deploy without writing any code. Caffe/Caffe2 works well with GPUs and scales well.

- CNTK – works well with CNNs and RNNs for image and speech recognition respectively, as well as with text-based data. It supports a Python interface and offers exceptional performance and excellent scalability across multiple nodes.

- MXNet – supports multiple programming languages as well as CNNs and RNNs, which makes it very suitable for image, speech, and NLP tasks. It is very scalable and GPU focused.
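Most of these frameworks let the same code run on either a CPU or a GPU. As a hedged sketch of what that looks like in practice, the snippet below uses PyTorch's `torch.cuda.is_available()` check to pick a device. The helper name `pick_device` and the matrix size are assumptions for this example, and the import is guarded so the snippet still runs where PyTorch is not installed.

```python
try:
    import torch  # optional dependency; the sketch falls back without it
except ImportError:
    torch = None

def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"

device = pick_device()
print(f"Selected device: {device}")

if torch is not None:
    # Tensors created on the selected device run their math there.
    x = torch.rand(1024, 1024, device=device)
    y = x @ x  # matrix multiplication on the CPU or the GPU
    print(y.shape)
```

The rest of a training or inference script can then stay the same regardless of where it runs, which is a large part of why these frameworks made GPU adoption so straightforward.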

Visit our Knowledge Base for tutorials on the listed frameworks.

Conclusion: GPU Machine Learning and AI

If current interests (read: funding) remain at the same level, and the robot uprising doesn’t throw off investors for a few years, we will only see more advancement within the field.

The human brain is a very creative and capable organ, and mimicking it through a combination of software and hardware is not particularly easy. However, we as a species will continue advancing our technology, improving our neural networks, developing new Deep Learning techniques, producing more capable and powerful software, and working toward the ultimate goal of creating a world where computers can help us advance faster, understand better, and learn quicker.

Will this ultimately lead us to the singularity? Likely not, but we will surely find out eventually. For now, Artificial Intelligence and Deep Learning create tremendous market opportunities on a global scale and are aimed at improving our lives and the world around us. They are not just redefining industries and markets; their applications are life changing.